Your first environment

Let Ogre create a fully functional environment that runs your code out of the box.

Using the Ogre Chrome Extension

Start your journey by following the steps in this tutorial published on our blog:

The tutorial above gives you a fully functional environment containing all the Python dependencies needed to run your code.

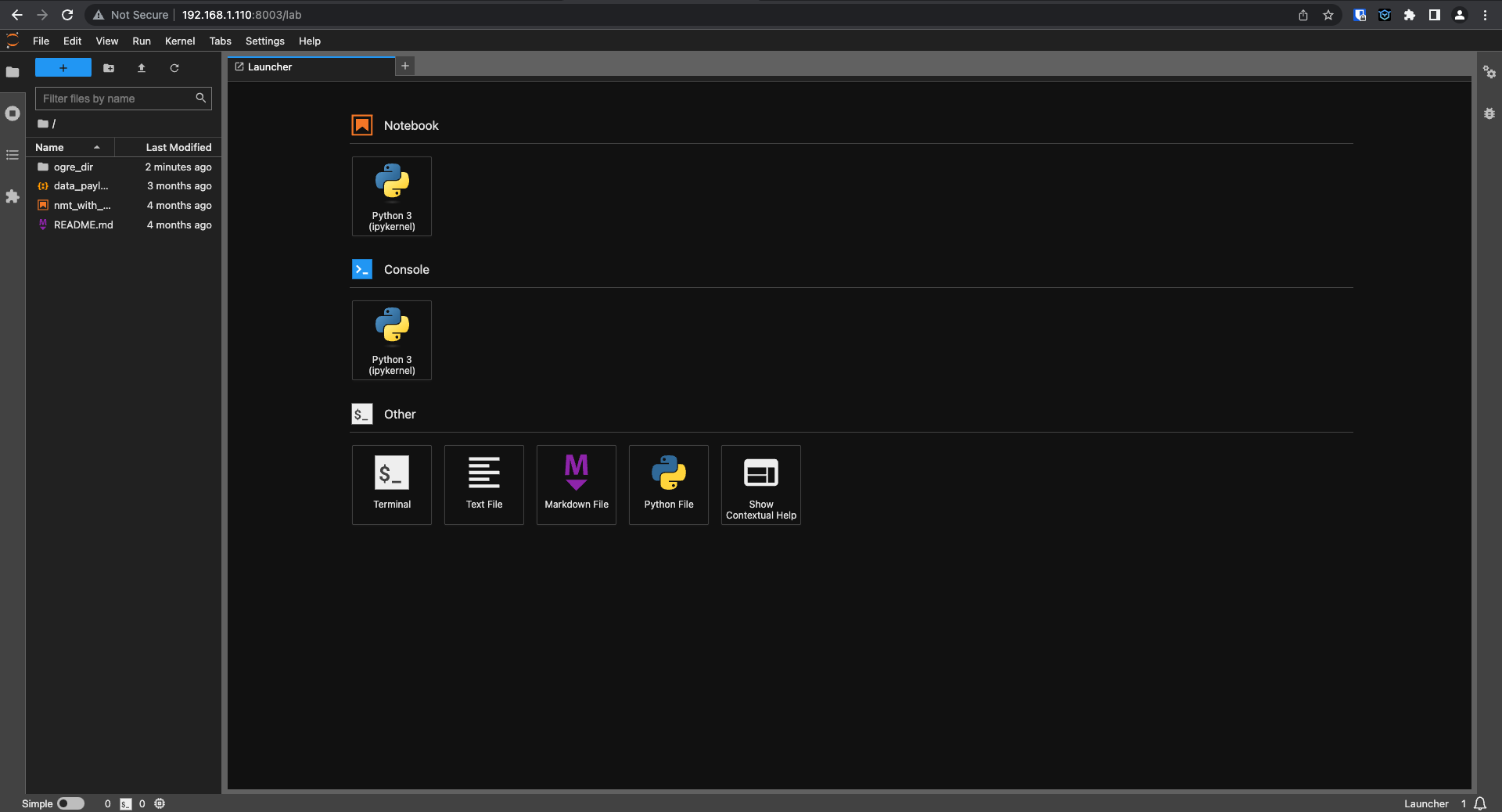

The environment is currently based on a Jupyter Lab interface (a VS Code interface in currently in beta test and will be available soon). It looks like this:

Figure 1: The Ogre environment, based on Jupyter Lab.

Figure 1: The Ogre environment, based on Jupyter Lab.

As in any JupyterLab-based application, you can browse the code base, run terminal commands, create notebooks, and so on. What sets Ogre apart is that it automatically identifies and installs the Python dependencies needed to run your code.

Developers can rely on Ogre to build a clean coding and runtime environment that contains all the Python dependencies, is isolated from the host's environment, and guarantees that their code will run.
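This page does not say how Ogre isolates the environment from the host. As a local analogue only, Python's standard `venv` module shows what isolation means for dependencies: the new environment has its own interpreter prefix and its own site-packages, so packages installed into it do not touch the host interpreter. The helper names `create_isolated_env` and `is_isolated` are hypothetical, and the sketch creates a bare environment without pip to stay fast.

```python
import os
import subprocess
import tempfile
import venv
from pathlib import Path


def create_isolated_env(env_dir: Path) -> Path:
    """Create a bare virtual environment in `env_dir` and return the path to its interpreter."""
    # with_pip=False keeps this sketch fast; a real builder would next install
    # the detected dependencies into this environment.
    venv.create(env_dir, with_pip=False, symlinks=(os.name != "nt"))
    bin_dir = "Scripts" if os.name == "nt" else "bin"
    exe_name = "python.exe" if os.name == "nt" else "python"
    return env_dir / bin_dir / exe_name


def is_isolated(python: Path) -> bool:
    """Ask the given interpreter whether it runs inside a virtual environment."""
    result = subprocess.run(
        [str(python), "-c", "import sys; print(sys.prefix != sys.base_prefix)"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip() == "True"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        python = create_isolated_env(Path(tmp) / "env")
        print(is_isolated(python))  # True
```

A virtual environment only isolates Python packages; it still shares the host's operating system and system libraries, which is why hosted environments are usually built with heavier isolation such as containers.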

Using the Ogre CLI

The CLI lets users customize how Ogre builds and deploys the environment. With it, users can:

- Modify specific deployment parameters that are not accessible from the extension.

- Deploy local projects, i.e., source code stored in a local folder, directly to the Ogre.run cloud. This is very handy for professionals who want to expose their project immediately without having to create a repository on GitHub or GitLab.

Basic usage

After installation, one can simply go

Create a config file

This example shows how to quickly create a fully functional environment for a public repository (repo) on GitHub. We will use the following repos:

- Ogarantia/tensorflow-attention - https://github.com/Ogarantia/tensorflow-attention: a simple example of the attention mechanism using TensorFlow. It consists of a single Jupyter notebook that shows how to train and deploy a neural network built with the attention mechanism.

- andrewssobral/llama-webapp - https://github.com/andrewssobral/llama-webapp: a Gradio web interface for interacting with Meta’s LLaMA models.